Removing 100s of blank pages from PDFs

Last updated: 13 December 2022

I recently cleared out a filing cabinet that had served as a dumping ground for years: 1000s of pages of documents ranging from the utterly useless to the must-not-lose-ever. A few hours of scanning later, I had 1000s of PDFs with 100s of blank pages scattered throughout.

As usually happens, no existing tool did exactly this job, but all the parts exist in the Linux tool suite. A StackOverflow answer showed how these parts could be combined, but I needed something a little more thorough for my problem: batch use on all my PDFs, fine-tuning of the "remove this page" criteria, confidence that important pages wouldn't accidentally be lost, and an easy way to see where any blank pages remained. I broke things down into three distinct steps:

- gather some per-page metrics summarising what's on each page,

- use visual tools to help decide on a "blank-page" criterion, and finally

- batch re-generate all PDFs minus the blank pages.

1. Gathering per-page PDF metrics

Surprisingly, Ghostscript seemed to be the only established command line tool for PDF analysis. It provides two special output devices that measure the ink coverage on each page. Although not particularly helpfully named, they differ in that:

inkcov returns the percent of pixels with non-zero C, M, Y, and K values, whereas ink_cov returns the mean percent of C, M, Y, and K in each of the pixels.

Wrapping Ghostscript ink coverage analysis with some command line text processing tools, the following script loops over each PDF in a provided folder, dumping ink coverage statistics for each page to an analysis.txt file in /tmp/pdf_trim/:

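analyse_pdfs

    #!/usr/bin/env bash
    device="ink_cov"
    out="/tmp/pdf_trim/analysis.txt"

    [ "$#" -eq 1 ] || { echo "Target directory required as argument"; exit 1; }
    in="$(realpath "$1")"

    # Start from a clean output file, creating its directory if needed
    mkdir -p "$(dirname "$out")"
    rm -f "$out"

    pushd "$in"

    # One output line per page: <dir> <relative path> <page> <C> <M> <Y> <K> CMYK OK
    # Later steps split these lines on whitespace, so paths must not contain spaces
    find . -name '*.pdf' | while read -r p; do
      gs -o - -sDEVICE="$device" "$p" | grep CMYK | grep -n '' | \
        sed 's/:/ /; s|^|'"$in"' '"$(echo "$p" | sed 's|^\./||')"' |' | \
        tee -a "$out"
    done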
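To make the text-processing part of that pipeline easier to follow, here's a minimal sketch that runs it on two fake Ghostscript lines instead of a real PDF. The fake_gs_output and tag_pages names are made up for this illustration, and the spacing of the fake lines is my assumption about what the device prints:

```shell
# Two fake lines in the layout the ink_cov device prints (assumed spacing)
fake_gs_output() {
  printf ' 4.78717  4.39309  4.14000  4.09732 CMYK OK\n'
  printf ' 0.02228  0.16344  0.16444  0.00000 CMYK OK\n'
}

# Number each page line, then prefix the directory ($1) and file name ($2)
tag_pages() {
  grep CMYK | grep -n '' | sed "s/:/ /; s|^|$1 $2 |"
}

fake_gs_output | tag_pages /folder/with/my/pdfs gov/scan0141.pdf
```

The grep -n '' call is what supplies the page number: Ghostscript prints one CMYK line per page, in page order, so the line number is the page number.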

I found the ink_cov device more useful than inkcov for this problem, as a lot of blank pages would have large sections of negligible, yet still non-zero colour values. Different scanners may produce different results.
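A toy calculation shows why the two metrics can disagree so much on a noisy scan. This is only a sketch of the two definitions as described above, not Ghostscript's actual implementation; the pixel values and the nonzero_share and mean_level names are invented for the example:

```shell
# Toy "page": cyan ink level (percent) for each of four pixels.
# Three of the four pixels carry faint scanner noise.
pixels="0 0.4 0.2 0.6"

# inkcov-style metric: share of pixels with any ink at all
nonzero_share() {
  echo "$1" | tr ' ' '\n' | awk '$1 > 0 { n++ } END { printf "%.2f\n", 100 * n / NR }'
}

# ink_cov-style metric: mean ink level across all pixels
mean_level() {
  echo "$1" | tr ' ' '\n' | awk '{ s += $1 } END { printf "%.2f\n", s / NR }'
}

echo "non-zero share: $(nonzero_share "$pixels")%"   # 75.00%
echo "mean level:     $(mean_level "$pixels")%"      # 0.30%
```

By the first measure the page looks three-quarters covered; by the second it is nearly empty, which matches what the eye sees on a blank scan.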

Here are some sample results produced by the script:

    ben@pc:~$ ./analyse_pdfs /folder/with/my/pdfs

/tmp/pdf_trim/analysis.txt

    /folder/with/my/pdfs gov/scan0141.pdf 1 4.78717 4.39309 4.14000 4.09732 CMYK OK
    /folder/with/my/pdfs gov/scan0144.pdf 1 2.61998 2.42245 2.39633 1.92218 CMYK OK
    /folder/with/my/pdfs gov/scan0149.pdf 1 5.67520 5.39294 5.08260 5.06835 CMYK OK
    /folder/with/my/pdfs gov/scan0149.pdf 2 5.49347 5.19683 4.90494 4.95353 CMYK OK
    /folder/with/my/pdfs gov/scan0149.pdf 3 4.32067 4.19472 3.94193 3.84401 CMYK OK
    /folder/with/my/pdfs gov/scan0149.pdf 4 1.80820 1.70653 1.75234 1.39730 CMYK OK
    /folder/with/my/pdfs gov/scan0139.pdf 1 4.99882 4.61552 4.28609 4.34633 CMYK OK
    /folder/with/my/pdfs gov/scan0148.pdf 1 4.34439 3.99920 3.79326 3.72120 CMYK OK
    /folder/with/my/pdfs gov/scan0143.pdf 1 3.24139 3.02562 2.89699 2.81249 CMYK OK
    /folder/with/my/pdfs gov/scan0134.pdf 1 2.71977 2.50300 2.35145 2.13092 CMYK OK
    /folder/with/my/pdfs tax/scan0093.pdf 1 3.01356 2.68351 2.79580 2.32399 CMYK OK
    /folder/with/my/pdfs tax/scan0095.pdf 1 2.67312 2.54626 2.39913 2.39205 CMYK OK
    /folder/with/my/pdfs tax/scan0095.pdf 2 0.02228 0.16344 0.16444 0.00000 CMYK OK
    /folder/with/my/pdfs tax/scan0098.pdf 1 1.91194 1.76390 1.68544 1.63082 CMYK OK
    /folder/with/my/pdfs tax/scan0098.pdf 2 1.92967 1.77983 1.70217 1.65619 CMYK OK
    ...
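A quick sanity check I'd suggest at this point is counting how many pages were recorded per PDF, to confirm nothing was silently skipped. This is a rough sketch; pages_per_pdf is a hypothetical helper, and the sample file just reuses three lines in the layout above:

```shell
# Count recorded pages per PDF from analysis-style lines:
# <dir> <relative path> <page> <C> <M> <Y> <K> CMYK OK
pages_per_pdf() {
  awk '{ n[$2]++ } END { for (f in n) print n[f], f }' "$1" | sort -k 2
}

# Small sample in the same layout as analysis.txt
sample="$(mktemp)"
cat > "$sample" <<'EOF'
/folder/with/my/pdfs gov/scan0149.pdf 1 5.67520 5.39294 5.08260 5.06835 CMYK OK
/folder/with/my/pdfs gov/scan0149.pdf 2 5.49347 5.19683 4.90494 4.95353 CMYK OK
/folder/with/my/pdfs tax/scan0095.pdf 1 2.67312 2.54626 2.39913 2.39205 CMYK OK
EOF

pages_per_pdf "$sample"
rm "$sample"
```

On the real data it would be called as pages_per_pdf /tmp/pdf_trim/analysis.txt.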

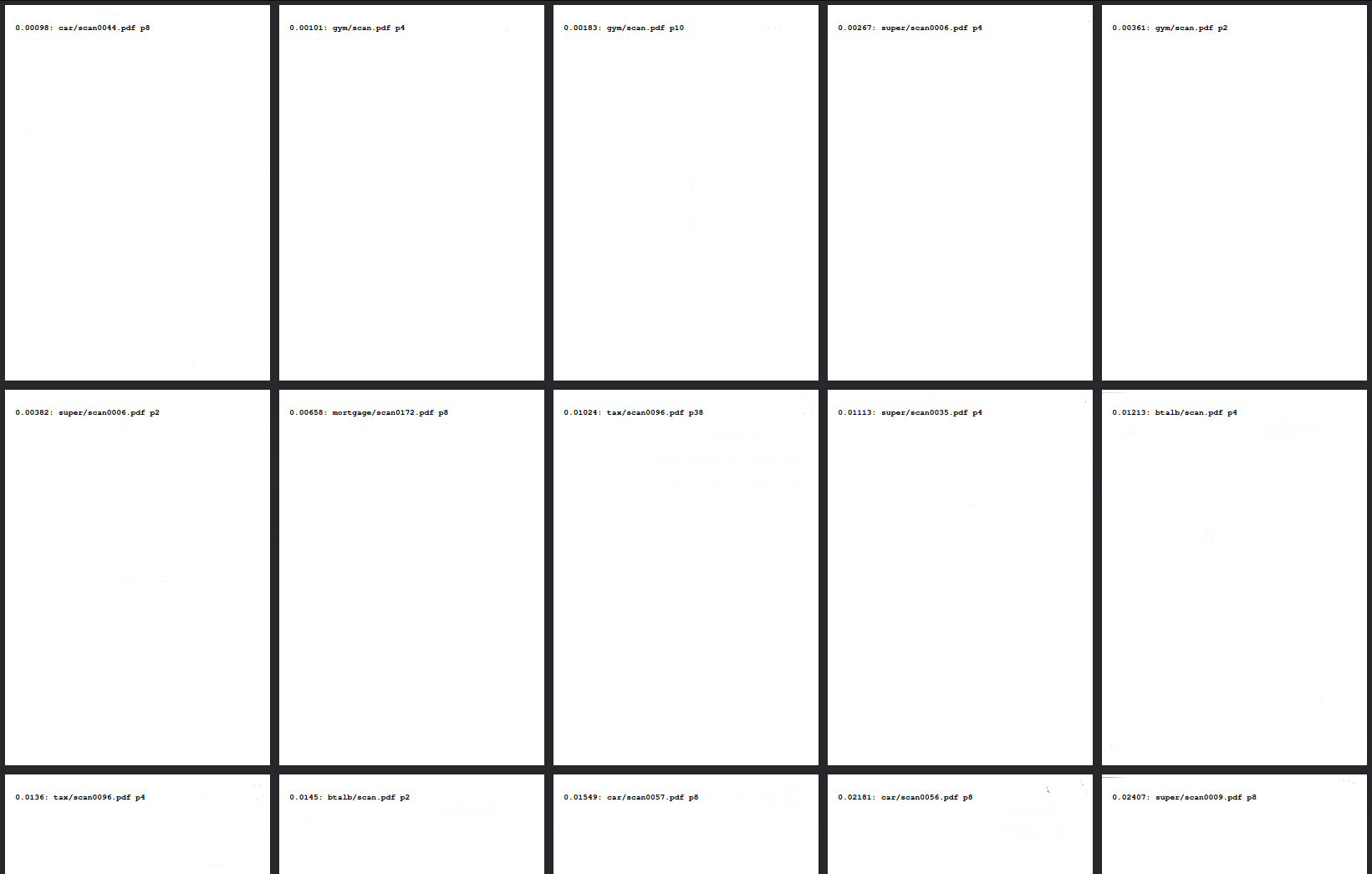

2. Deciding on a "blank-page" criterion

Next, a "blank-page" criterion needs to be derived from the metrics available in the analysis.txt file. The challenge here is finding a criterion that reliably sorts the pages by "level of blankness".

The following script takes the analysis.txt file in /tmp/pdf_trim/ and produces a criteria.txt file with the criteria value appended to each line, and all lines sorted by this value. It also produces a criteria.pdf with all PDF pages sorted by criteria value and details overlaid on each page.

find_blank_criteria

    #!/usr/bin/env bash
    criteria='$4+$5+$6+$7'
    in="/tmp/pdf_trim/analysis.txt"
    out="/tmp/pdf_trim/criteria"
    tmp_over="/tmp/pdf_trim/over.pdf"
    tmp_under="/tmp/pdf_trim/under.pdf"

    # Apply the criteria to each line, and then sort
    with_criteria="$(awk '{ print $0, '"$criteria"' }' "$in" | \
      sort -n -k 10 | tee "$out.txt")"

    # Create an overlay pdf with the criteria values printed
    echo "$with_criteria" | awk '{printf "%s: %s p%s\n", $10, $2, $3 }' | \
      enscript --no-header --font Courier-Bold18 --lines-per-page 1 -o - | \
      ps2pdf - "$tmp_over"

    # Create an underlay pdf with the sorted pages by generating PDFtk handle lists
    # (each line number becomes a handle, with tr mapping the digits 0-9 to A-J)
    handles="$(paste -d ' ' \
      <(echo "$with_criteria" | grep -n '' | sed 's/:.*//' | tr '0-9' 'A-Z') \
      <(echo "$with_criteria"))"

    # Paths in analysis.txt are absolute, so no directory change is needed here
    pdftk $(echo "$handles" | awk '{ printf "%s=%s/%s ", $1, $2, $3 }') \
      cat $(echo "$handles" | awk '{ printf "%s%s ", $1, $4}') \
      output "$tmp_under"

    # Merge them into the final result & remove temporary files
    pdftk "$tmp_over" multibackground "$tmp_under" output "$out.pdf"
    rm "$tmp_over" "$tmp_under"
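The handle generation is probably the least obvious part of the script, so here's a small sketch of it in isolation. As far as I can tell PDFtk handles need to be upper-case letters, which is why each line number has its digits swapped for letters; to_handle is a name invented for this example:

```shell
# Turn a line number into a letters-only handle by mapping digits 0-9 to A-J
to_handle() {
  echo "$1" | tr '0-9' 'A-J'
}

to_handle 1     # B
to_handle 12    # BC
to_handle 305   # DAF
```

Every page therefore gets its own unique handle, and the cat operation picks page N of that handle with an argument like BC3.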

The script uses the criterion $4+$5+$6+$7, which sums the C, M, Y, and K channel values from analysis.txt columns 4-7. Although this criterion wasn't perfect, I found it produced the most sensible results for my scans. Different criteria may work better for different scanners.
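If you want to compare a few candidate expressions before generating the full PDF, something like the following sketch may help. It ranks a handful of analysis lines by whatever awk expression is passed in; rank_pages is a hypothetical helper and the black-channel-only expression is just one alternative to try, not one I'm recommending:

```shell
# Rank analysis lines by an awk expression over the C, M, Y, K columns ($4-$7)
rank_pages() {
  awk '{ print $0, '"$1"' }' "$2" | sort -n -k 10
}

# Three lines borrowed from the sample results
sample="$(mktemp)"
cat > "$sample" <<'EOF'
/folder/with/my/pdfs gov/scan0149.pdf 4 1.80820 1.70653 1.75234 1.39730 CMYK OK
/folder/with/my/pdfs tax/scan0095.pdf 2 0.02228 0.16344 0.16444 0.00000 CMYK OK
/folder/with/my/pdfs tax/scan0098.pdf 1 1.91194 1.76390 1.68544 1.63082 CMYK OK
EOF

echo "Sum of all channels:"
rank_pages '$4+$5+$6+$7' "$sample" | awk '{ print $10, $2, "p" $3 }'

echo "Black channel only:"
rank_pages '$7' "$sample" | awk '{ print $10, $2, "p" $3 }'

rm "$sample"
```

Both expressions put tax/scan0095.pdf page 2 first here; differences between criteria only show up on borderline pages, which is where the visual check in criteria.pdf earns its keep.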

As an example, here's the command to run the script and some sample output in my criteria.pdf file:

    ben@pc:~$ ./find_blank_criteria

3. Batch re-generation of PDFs, minus the blank pages

Lastly, each of the PDFs is re-generated with pages removed that have a criteria value below a decided threshold.

The script below takes the criteria.txt file and uses a criteria threshold value to iteratively generate each trimmed PDF file in a selected output directory.

remove_blanks

    #!/usr/bin/env bash
    threshold=1.59
    input="/tmp/pdf_trim/criteria.txt"

    [ "$#" -eq 1 ] || { echo "Output directory required as argument"; exit 1; }
    out="$(realpath "$1")"

    # Pages to keep: criteria value above the threshold, ordered by file then page
    in_list="$(cat "$input")"
    out_list="$(awk -v t="$threshold" '$10 > t' "$input" | \
      sort -k 2,2 -k 3,3n)"
    in_files="$(echo "$in_list" | cut -d ' ' -f 1,2 | sort -u)"
    out_files="$(echo "$out_list" | cut -d ' ' -f 1,2 | sort -u)"

    # Re-generate each PDF from its kept pages, mirroring the input folder layout
    echo "$out_files" | while read -r f; do
      dest="$(echo "$f" | sed 's|[^ ]* |'"$out"'/|; s/\.pdf$/_trimmed\.pdf/')"
      echo "$dest"
      mkdir -p "$(dirname "$dest")"
      pdftk "$(echo "$f" | sed 's| |/|')" \
        cat $(echo "$out_list" | grep -F "$f " | cut -d ' ' -f 3 | tr '\n' ' ' | \
          sed 's/ $//') \
        output "$dest"
    done

    printf "\nTrimmed %s pages with criteria value below %s\n" \
      "$(($(echo "$in_list" | wc -l) - $(echo "$out_list" | wc -l)))" "$threshold"
    printf "All pages were skipped from the following files:\n%s\n" \
      "$(comm -23 <(echo "$in_files") <(echo "$out_files") | sed 's/^/\t/; s| |/|')"

The script is used as below:

    ben@pc:~$ ./remove_blanks /folder/for/trimmed/pdfs
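Before committing to a threshold, it can help to preview which pages it would drop. Here's a minimal sketch of such a dry run, assuming criteria.txt lines with the criteria value in column 10; pages_to_drop is a hypothetical helper, and the sample values are the channel sums of three pages from the earlier sample results:

```shell
# List the pages a threshold would drop: criteria value (column 10) not above it
pages_to_drop() {
  awk -v t="$1" '$10 <= t { print $2, "p" $3, $10 }' "$2"
}

# Three lines in the criteria.txt layout
sample="$(mktemp)"
cat > "$sample" <<'EOF'
/folder/with/my/pdfs tax/scan0095.pdf 2 0.02228 0.16344 0.16444 0.00000 CMYK OK 0.35016
/folder/with/my/pdfs gov/scan0149.pdf 4 1.80820 1.70653 1.75234 1.39730 CMYK OK 6.66437
/folder/with/my/pdfs tax/scan0098.pdf 1 1.91194 1.76390 1.68544 1.63082 CMYK OK 6.9921
EOF

pages_to_drop 1.59 "$sample"   # tax/scan0095.pdf p2 0.35016
rm "$sample"
```

The comparison is the complement of the one in remove_blanks, so the pages listed are exactly the ones the script would leave out.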

In an ideal world the criteria value threshold would be perfect, but in reality some non-blank pages will appear before blank pages. I chose a threshold value that had no non-blank pages below it, then used criteria.pdf to find the remaining blank pages, and trimmed them out using a manual command like:

    ben@pc:~$ pdftk input.pdf cat 1-9 11-end output.pdf
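When a file has several leftover blanks, writing the page ranges by hand gets fiddly. The sketch below builds the range list from a page count and the pages to drop; keep_ranges is a made-up helper, and it assumes you already know the total page count (PDFtk's dump_data output includes a NumberOfPages line that could supply it):

```shell
# Build a PDFtk page-range list that keeps every page except the listed ones.
# Usage: keep_ranges <total pages> <page to drop>...
keep_ranges() {
  local total="$1"
  shift
  printf '%s\n' "$@" | awk -v total="$total" '
    { drop[$1] = 1 }
    END {
      out = ""
      start = 0
      # Walk one step past the last page so the final range gets closed
      for (p = 1; p <= total + 1; p++) {
        if (p <= total && !(p in drop)) {
          if (!start) start = p
        } else if (start) {
          piece = (start == p - 1) ? start : start "-" (p - 1)
          out = (out == "") ? piece : out " " piece
          start = 0
        }
      }
      print out
    }'
}

keep_ranges 12 10    # 1-9 11-12
keep_ranges 5 1 5    # 2-4

# Intended use (not run here):
#   pdftk input.pdf cat $(keep_ranges 12 10) output output.pdf
```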

Conclusion

And there we have it: three relatively terse but powerful bash scripts and some visual feedback-based criteria tuning later, I've got the 1000s of PDFs minus the 100s of blank pages.

Hope that's helped. If you've got any suggestions for doing this better, please let me know. I know in an ideal world I'd prefer some better metrics than what's provided by Ghostscript devices. But I'm going to sign off from this problem here.


© Ben Talbot. All rights reserved.