BenchBot environments for active robotics (BEAR): Simulated data for active scene understanding research

2 March 2022

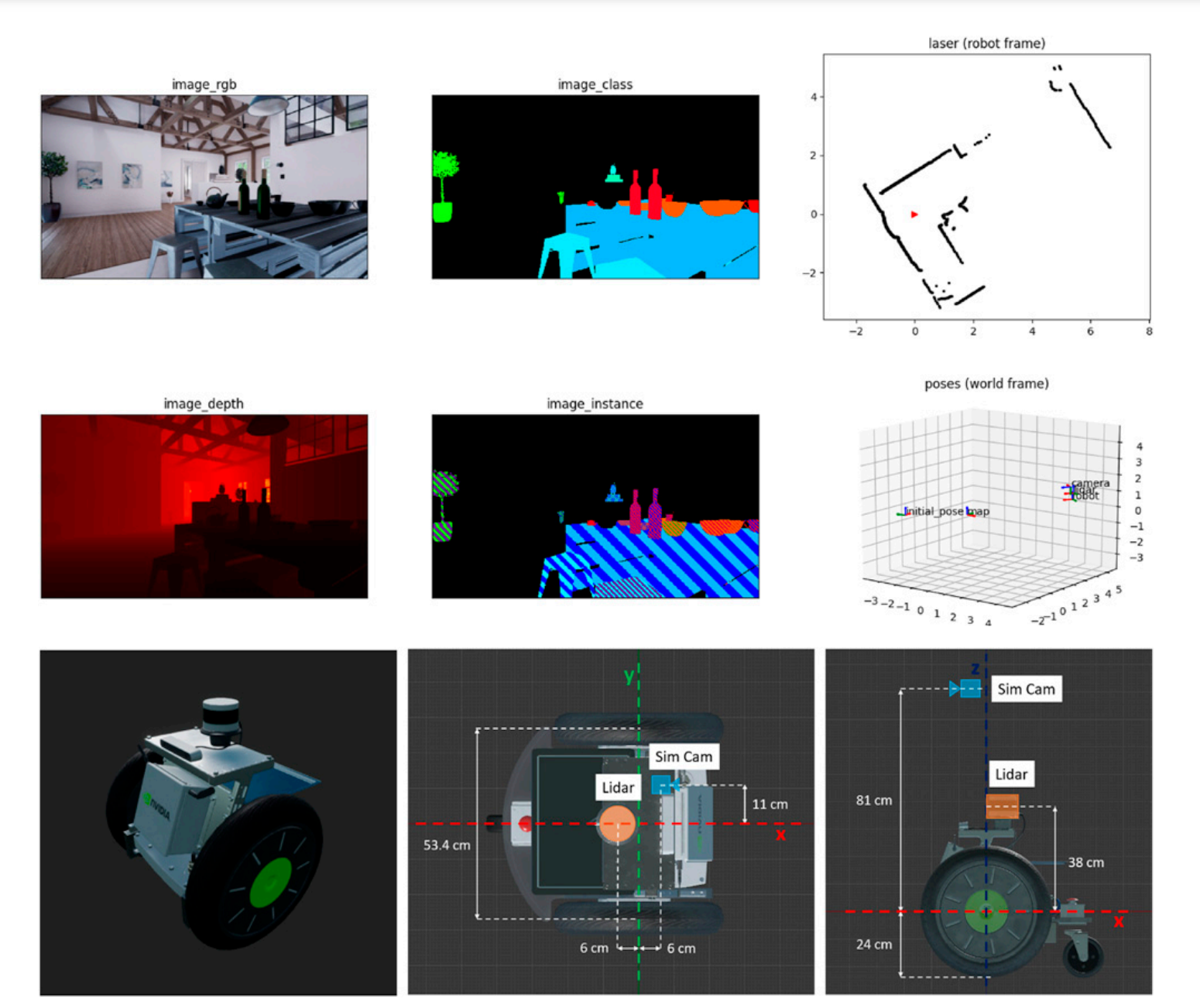

We present a platform to foster research in active scene understanding, consisting of high-fidelity simulated environments and a simple yet powerful API that controls a mobile robot in simulation and reality. In contrast to static, pre-recorded datasets that focus on the perception aspect of scene understanding, agency is a top priority in our work. We provide three levels of robot agency, allowing users to control a robot at varying levels of difficulty and realism. While the most basic level provides pre-defined trajectories and ground-truth localisation, the more realistic levels allow us to evaluate integrated behaviours comprising perception, navigation, exploration and SLAM. Unlike existing simulation environments, we focus on robust scene understanding research through our environment interface (BenchBot), which provides a simple API for a seamless transition between the simulated environments and real robotic platforms. We believe this scaffolded design is an effective approach to bridging the gap between classical static datasets without any agency and the unique challenges of robotic evaluation in reality. Our BenchBot Environments for Active Robotics (BEAR) consist of 25 indoor environments under day and night lighting conditions, a total of 1443 objects to be identified and mapped, and ground-truth 3D bounding boxes for use in evaluation.
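To make the role of the ground-truth 3D bounding boxes concrete, the following is a minimal sketch of one common way such boxes can be compared against a predicted object map: intersection over union (IoU) of two 3D boxes. It is an illustration under stated assumptions, not the evaluation procedure used by BEAR or BenchBot, which the abstract does not specify. The `Box3D` class, the `iou_3d` function, and the axis-aligned min/max corner representation are all hypothetical choices made for this example.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class Box3D:
    """Axis-aligned 3D box (hypothetical representation, not the BEAR format)."""

    min_corner: tuple[float, float, float]
    max_corner: tuple[float, float, float]

    def volume(self) -> float:
        # Product of the extents along x, y and z; degenerate extents count as 0.
        vol = 1.0
        for lo, hi in zip(self.min_corner, self.max_corner):
            vol *= max(0.0, hi - lo)
        return vol


def iou_3d(a: Box3D, b: Box3D) -> float:
    """Intersection over union of two axis-aligned 3D boxes."""
    intersection = 1.0
    for a_lo, a_hi, b_lo, b_hi in zip(
        a.min_corner, a.max_corner, b.min_corner, b.max_corner
    ):
        overlap = min(a_hi, b_hi) - max(a_lo, b_lo)
        if overlap <= 0.0:
            # No overlap along this axis means the boxes do not intersect.
            return 0.0
        intersection *= overlap
    union = a.volume() + b.volume() - intersection
    return intersection / union if union > 0.0 else 0.0


# Assumed example values: a ground-truth box and a prediction shifted by 1 m in x.
ground_truth = Box3D((0.0, 0.0, 0.0), (2.0, 2.0, 2.0))
prediction = Box3D((1.0, 0.0, 0.0), (3.0, 2.0, 2.0))
score = iou_3d(ground_truth, prediction)
```

In this example the two boxes each have volume 8 and overlap in a volume of 4, so the score is 4 / 12, or one third. Real object maps may use oriented boxes, in which case the intersection volume needs a more general computation than the per-axis overlap used here.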

© Ben Talbot. All rights reserved.