The Abstract Map

Commenced

17 June 2013

Last updated

19 September 2020

17 June 2013

Began PhD, as part of the Human Cues for Robot Navigation Australian Research Council (ARC) Discovery project

30 May 2015

First version of the abstract map presented as part of a floor plan-based navigation system at ICRA 2015

16 July 2015

Concept of an abstract map built from spatial descriptions presented to the Workshop on Model Learning for Human-Robot Communication at RSS 2015

2 December 2015

Navigation using an abstract map built from natural language phrases presented at ACRA 2015, and won best student paper award

21 May 2016

Demonstrated the abstract map navigating a robot through real built environments for ICRA 2016 paper

21 September 2018

Thesis published on integrating symbolic spatial information in robot navigation, with the abstract map as the core contribution

30 August 2020

Code for the final version of the abstract map publicly released (v1.1.0)

1 September 2020

Navigation studies comparing abstract map-based robot navigation with human performance in real environments published in IEEE TCDS, as the conclusion of this work

19 September 2020

Research results reported nationally, including coverage by Fairfax media

The Abstract Map was developed throughout my PhD, as part of the Human Cues for Robot Navigation ARC Discovery project (DP140103216). By using the abstract map, a robot navigation system is able to use symbols to purposefully navigate in unseen spaces.

Work on the abstract map resulted in five research publications, a doctoral thesis, and four different software implementations. More information is available on these outputs through the links below, except for the first three software implementations, which were superseded by the final open source software release.

The project started by exploring how symbols from human navigation could be transformed into spatial representations usable in robot navigation. We focused solely on metric floor plans, the human navigation cue that shared the most similarities with the spatial representations typically employed in robot navigation. The work produced an abstract map that used least-squares optimisation to estimate the 2D homogeneous transform (scaling, rotation, and translation) between the coordinate frame of the floor plan and the map built from the robot's observations. The work, shown below, was published at the 2015 International Conference on Robotics and Automation (ICRA).

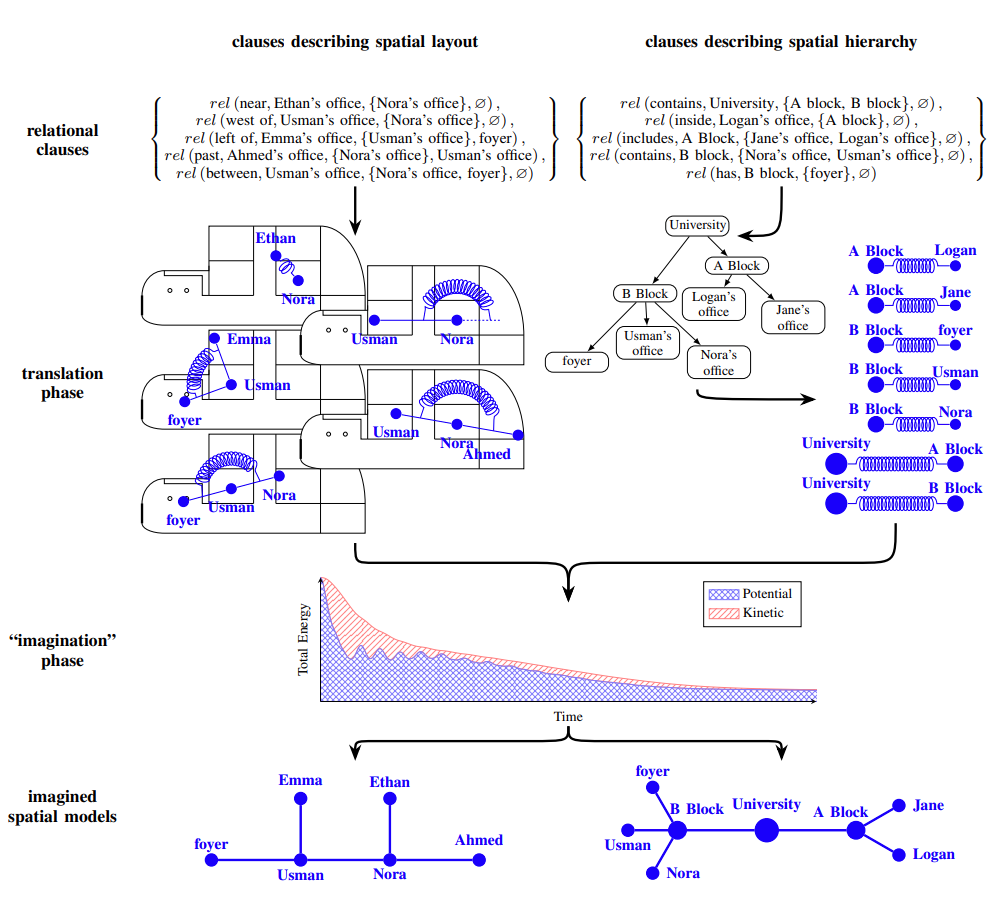
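As a rough illustration of the least-squares step described above, the sketch below fits a 2D similarity transform (scale, rotation, translation) between matched points in a floor plan and in a robot's map. It is not the published implementation: the function name `fit_similarity_2d`, the assumption that point correspondences are already known, and the complex-number formulation are all choices made here for brevity.

```python
import cmath


def fit_similarity_2d(plan_pts, map_pts):
    """Least-squares fit of map ~= m * plan + t, treating 2D points as complex numbers.

    plan_pts and map_pts are equal-length sequences of (x, y) pairs, where
    plan_pts[i] and map_pts[i] are assumed to be the same physical place.
    Returns (T, scale, angle): a 3x3 homogeneous transform as nested lists,
    the scale factor, and the rotation in radians.
    """
    z = [complex(x, y) for x, y in plan_pts]
    w = [complex(x, y) for x, y in map_pts]
    n = len(z)
    z_mean = sum(z) / n
    w_mean = sum(w) / n

    # Closed-form solution of the complex linear regression w = m * z + t.
    num = sum((zi - z_mean).conjugate() * (wi - w_mean) for zi, wi in zip(z, w))
    den = sum(abs(zi - z_mean) ** 2 for zi in z)
    m = num / den             # encodes scale and rotation together
    t = w_mean - m * z_mean   # translation

    T = [[m.real, -m.imag, t.real],
         [m.imag, m.real, t.imag],
         [0.0, 0.0, 1.0]]
    return T, abs(m), cmath.phase(m)


# Hypothetical data: four floor plan corners and where the robot observed them.
plan = [(0.0, 0.0), (4.0, 0.0), (4.0, 3.0), (0.0, 3.0)]
true_m, true_t = cmath.rect(2.0, 0.5), complex(1.0, -3.0)
observed = [(p.real, p.imag) for p in (true_m * complex(x, y) + true_t for x, y in plan)]
T, scale, angle = fit_similarity_2d(plan, observed)
```

Representing each point as a complex number turns the scale-plus-rotation into a single complex multiplier, so the fit reduces to an ordinary one-variable linear regression with a closed-form answer.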

We next progressed to looking at how a robot could use structured semantic descriptions like "the kitchen is past the robotics lab" to navigate in unseen spaces. In this work, a novel malleable spatial model was defined that would be the core of all future work. The model converted semantic descriptions into simulated springs and point-masses, and used the dynamics of the system to imagine spatial models from scratch. The malleable nature of the spring dynamics allowed the spatial model to evolve as the robot gained new symbolic spatial information while navigating in the unseen environment. The work received a best student paper award at the 2015 Australasian Conference on Robotics and Automation (ACRA), and an extension of the work was published at the 2016 International Conference on Robotics and Automation (ICRA).
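The idea of letting springs and point-masses settle into a spatial layout can be sketched in a few lines. The example below is only an illustration of the general technique: the `relax` function, the unit masses, the stiffness and damping values, and in particular the encoding of "past" as three distance springs are all assumptions made here, not the formulation used in the abstract map.

```python
import math


def relax(positions, springs, stiffness=4.0, damping=2.0, dt=0.01, steps=5000):
    """Let a damped spring and point-mass system settle.

    positions: dict mapping a place name to an initial [x, y] guess.
    springs: list of (name_a, name_b, rest_length) tuples.
    Every place is a unit point-mass; returns the settled positions.
    """
    pos = {k: [float(v[0]), float(v[1])] for k, v in positions.items()}
    vel = {k: [0.0, 0.0] for k in pos}
    for _ in range(steps):
        # Start with viscous damping so the system loses energy and settles.
        force = {k: [-damping * vel[k][0], -damping * vel[k][1]] for k in pos}
        for a, b, rest in springs:
            dx = pos[b][0] - pos[a][0]
            dy = pos[b][1] - pos[a][1]
            dist = math.hypot(dx, dy) or 1e-9
            f = stiffness * (dist - rest)  # positive when stretched
            fx, fy = f * dx / dist, f * dy / dist
            force[a][0] += fx
            force[a][1] += fy
            force[b][0] -= fx
            force[b][1] -= fy
        for k in pos:
            # Semi-implicit Euler: update velocity first, then position.
            vel[k][0] += dt * force[k][0]
            vel[k][1] += dt * force[k][1]
            pos[k][0] += dt * vel[k][0]
            pos[k][1] += dt * vel[k][1]
    return pos


# Hypothetical encoding of "the kitchen is past the robotics lab":
# the kitchen is asked to sit further from the robot than the lab does.
guess = {"robot": [0.0, 0.0], "lab": [1.0, 0.5], "kitchen": [0.5, 2.0]}
layout = relax(guess, [("robot", "lab", 5.0),
                       ("lab", "kitchen", 5.0),
                       ("robot", "kitchen", 10.0)])
```

Because the layout is just the current state of a simulation, new symbolic information can be added as extra springs and the system left to settle again, which is one way to picture the malleability described above.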

Applying the novel spatial model more generically presented some challenges, with ensuring stable dynamics under numerical integration techniques proving troublesome. A C++11/14 implementation was created to thoroughly profile issues, and explore potential solutions, without interference from the synchronous rendering pipeline required in MATLAB. Conclusions and insights from the C++ version ensured the spring-based dynamics underpinning the abstract map could be generalised from the semantic descriptions used in previous work to all human navigation cues. My PhD thesis on how a robot can use human navigation symbols to navigate unseen spaces was finished in 2018, and described the use of a dynamics-inspired abstract map with all symbolic spatial information types for the first time.

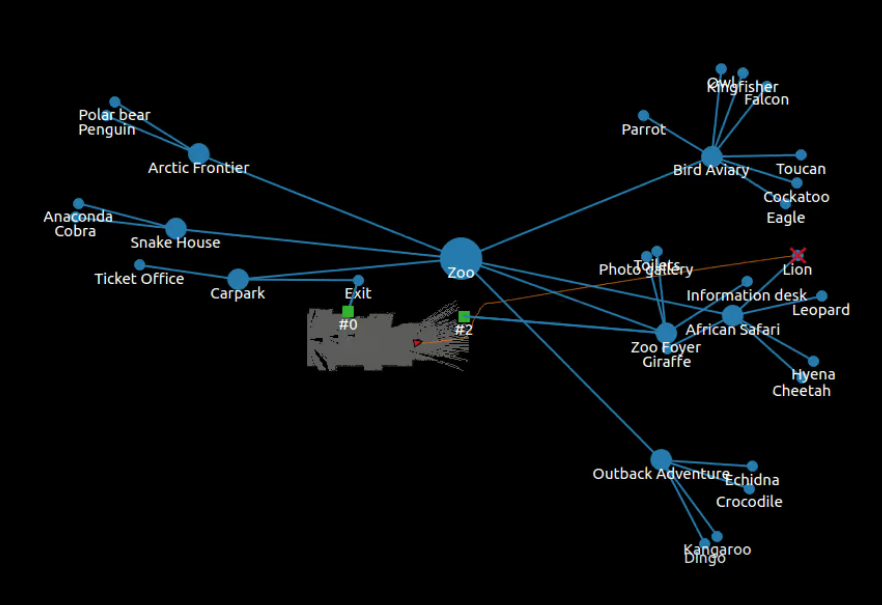
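To give a feel for why numerical integration of spring dynamics can be troublesome, the sketch below integrates a single undamped unit mass on a spring with two textbook schemes and compares the final energy. It is a generic illustration only: the source does not say which integrators were used in the MATLAB or C++ versions, and the function `final_energy` and its parameter values are chosen here purely for demonstration.

```python
def final_energy(k, dt, steps, semi_implicit):
    """Integrate a unit mass on a spring of stiffness k and return its final energy.

    Starts at x = 1, v = 0, so the exact energy is 0.5 * k at all times.
    """
    x, v = 1.0, 0.0
    for _ in range(steps):
        a = -k * x
        if semi_implicit:
            # Semi-implicit (symplectic) Euler: the new velocity drives the position.
            v += dt * a
            x += dt * v
        else:
            # Explicit Euler: both updates use the old state.
            x, v = x + dt * v, v + dt * a
    return 0.5 * v * v + 0.5 * k * x * x


exact = 0.5 * 100.0
explicit = final_energy(k=100.0, dt=0.01, steps=1000, semi_implicit=False)
symplectic = final_energy(k=100.0, dt=0.01, steps=1000, semi_implicit=True)
```

With explicit Euler the energy of this system is multiplied by `1 + k * dt**2` on every step, so it grows without bound however small the time step is, whereas the semi-implicit variant keeps the energy close to its exact value for this step size. Stiffer springs make the problem worse, which is the kind of behaviour that is easier to profile in a standalone implementation.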

The project culminated with publication in the IEEE Transactions on Cognitive and Developmental Systems, presenting a comparative study between human performance and a robot navigation system using an open source Python implementation of the abstract map. The comparative study asked humans and a robot to find a location in a simulated zoo, an environment they had never visited before. Human participants were outperformed by a robot using the abstract map by 11.5%, although it is important not to inflate the significance of the number given the sample size.

What is important is that the journal article concluded the project by demonstrating what we set out to do: a robot navigation system that could use human navigation cues to navigate unseen built environments with performance that rivalled humans. The result was a great conclusion to the project, and received media coverage in QUT's The LABS publication and the local Brisbane Times newspaper (links below).

Related Links

© Ben Talbot. All rights reserved.